AI tools for trustworthy AI development for healthcare applications

Related Tools:

ZS Insights

ZS Insights is a platform offering generative AI insights, technology, and applications for enterprise solutions. It provides expertise and perspectives to create impactful solutions across industries such as healthcare, pharmaceuticals, and biotech. The platform offers end-to-end solutions for healthcare R&D innovation, digital health, and personalized buying experiences. It also focuses on AI & Analytics, digital transformation, life sciences R&D, marketing strategies, sales effectiveness, and value strategy. ZS Insights aims to help businesses leverage generative AI to drive productivity, make better decisions, and achieve tangible outcomes.

IQVIA Healthcare-grade AI

IQVIA is a healthcare-grade AI tool that connects data, technology, and analytics to address the unique needs of healthcare. It offers solutions in research & development, real world evidence, commercialization, safety & regulatory compliance, and technologies. IQVIA AI is trained on high-quality data and deep domain expertise to generate reliable insights for the industry. The AI Assistant enables users to interact with solutions and products, providing faster decision-making and trustworthy results.

Trustworthy AI

Trustworthy AI is a business guide that focuses on navigating trust and ethics in artificial intelligence. Authored by Beena Ammanath, a global thought leader in AI ethics, the book provides practical guidelines for organizations developing or using AI solutions. It addresses the importance of AI systems adhering to social norms and ethics, making fair decisions in a consistent, transparent, explainable, and unbiased manner. Trustworthy AI offers readers a structured approach to thinking about AI ethics and trust, emphasizing the need for ethical considerations in the rapidly evolving landscape of AI technology.

Defined.ai

Defined.ai is a leading provider of high-quality and ethical data for AI applications. Founded in 2015, Defined.ai has a global presence with offices in the US, Europe, and Asia. The company's mission is to make AI more accessible and ethical by providing a marketplace for buying and selling AI data, tools, and models. Defined.ai also offers professional services to help deliver success in complex machine learning projects.

AI for Good

AI for Good is a platform focused on advancing trustworthy AI for sustainable development. It provides information on AI events, skills, standards, governance, and related initiatives. The platform aims to bring diverse voices together to harness AI's potential for inclusive and sustainable development worldwide.

Future AGI

Future AGI is a revolutionary AI data management platform that aims to achieve 99% accuracy in AI applications across software and hardware. It provides a comprehensive evaluation and optimization platform for enterprises to enhance the performance of their AI models. Future AGI offers features such as creating trustworthy, accurate, and responsible AI, 10x faster processing, generating and managing diverse synthetic datasets, testing and analyzing agentic workflow configurations, assessing agent performance, enhancing LLM application performance, monitoring and protecting applications in production, and evaluating AI across different modalities.

Coach

Coach is an AI-powered career development platform designed for learners, job seekers, and career shifters. It offers personalized career guidance, resources for skills development, and assistance in exploring various career paths. Built by CareerVillage and a coalition of educators and nonprofits, Coach aims to provide trustworthy AI career advising and support for individuals at every stage of their career journey.

Salieri

Salieri is a multi-agent LLM home multiverse platform that offers an efficient, trustworthy, and automated AI workflow. The innovative Multiverse Factory allows developers to elevate their projects by generating personalized AI applications through an intuitive interface. The platform aims to optimize user queries via LLM API calls, reduce expenses, and enhance the cognitive functions of AI agents. Salieri's team comprises experts from top AI institutes like MIT and Google, focusing on generative AI, neural knowledge graph, and composite AI models.

Arro

Arro is an AI-powered research assistant that helps product teams collect customer insights at scale. It uses automated conversations to conduct user interviews with thousands of customers simultaneously, generating product opportunities that can be directly integrated into the product roadmap. Arro's innovative AI-led methodology combines the depth of user interviews with the speed and scale of surveys, enabling product teams to gain a comprehensive understanding of their customers' needs and preferences.

Microsoft Responsible AI Toolbox

Microsoft Responsible AI Toolbox is a suite of tools designed to assess, develop, and deploy AI systems in a safe, trustworthy, and ethical manner. It offers integrated tools and functionalities to help operationalize Responsible AI in practice, enabling users to make user-facing decisions faster and easier. The Responsible AI Dashboard provides a customizable experience for model debugging, decision-making, and business actions. With a focus on responsible assessment, the toolbox aims to promote ethical AI practices and transparency in AI development.

Appen

Appen is a leading provider of high-quality data for training AI models. The company's end-to-end platform, flexible services, and deep expertise ensure the delivery of high-quality, diverse data that is crucial for building foundation models and enterprise-ready AI applications. Appen has been providing high-quality datasets that power the world's leading AI models for decades. The company's services enable it to prepare data at scale, meeting the demands of even the most ambitious AI projects. Appen also provides enterprises with software to collect, curate, fine-tune, and monitor traditionally human-driven tasks, creating massive efficiencies through a trustworthy, traceable process.

Human-Centred Artificial Intelligence Lab

The Human-Centred Artificial Intelligence Lab (Holzinger Group) is a research group focused on developing AI solutions that are explainable, trustworthy, and aligned with human values, ethical principles, and legal requirements. The lab works on projects related to machine learning, digital pathology, interactive machine learning, and more. Their mission is to combine human and computer intelligence to address pressing problems in various domains such as forestry, health informatics, and cyber-physical systems. The lab emphasizes the importance of explainable AI, human-in-the-loop interactions, and the synergy between human and machine intelligence.

Catty.AI

Catty.AI is an AI-driven platform that provides personalized and interactive learning experiences for children aged 2-12. It offers a wide range of captivating topics, including science, history, mathematics, and more, presented through engaging fairytales, illustrations, and narrations. Catty.AI prioritizes the well-being of children, ensuring that all content is age-appropriate, safe, and respectful of diverse cultures and beliefs.

Research Center Trustworthy Data Science and Security

The Research Center Trustworthy Data Science and Security is a hub for interdisciplinary research focusing on building trust in artificial intelligence, machine learning, and cyber security. The center aims to develop trustworthy intelligent systems through research in trustworthy data analytics, explainable machine learning, and privacy-aware algorithms. By addressing the intersection of technological progress and social acceptance, the center seeks to enable private citizens to understand and trust technology in safety-critical applications.

Google Public Policy

Google Public Policy is a website dedicated to showcasing Google's public policy initiatives and priorities. It provides information on various topics such as consumer choice, economic opportunity, privacy, responsible AI, security, sustainability, and trustworthy information & content. The site highlights Google's efforts in advancing bold and responsible AI, strengthening security, and promoting a more sustainable future. It also features news updates, research briefs, and collaborations with organizations to address societal challenges through technology and innovation.

Chatbase

Chatbase is a platform that allows users to create custom chatbots for their websites. These chatbots can be used for a variety of purposes, including customer support, lead generation, and user engagement. Chatbase provides a variety of features to help users create and customize their chatbots, including the ability to import data from multiple sources, customize the chatbot's appearance and behavior, and integrate with other tools. Chatbase also offers a variety of pre-built templates and examples to help users get started.

Czat.ai

Czat.ai is a Polish chat application powered by artificial intelligence that aims to provide answers to any question, solve problems, and help users achieve their goals. With over 25 expert companions available, users can engage in conversations on a wide range of topics, from career advice to personal development and even entertainment. The application offers practical solutions, quick responses, and creative ideas to assist users in their daily lives. It also emphasizes simplicity, accessibility, and user-friendly interactions, making advanced AI technology available to everyone.

Gastrograph AI

Gastrograph AI is a cutting-edge artificial intelligence platform that empowers food and beverage companies to optimize their products for consistent market success. Leveraging the world's largest sensory database, Gastrograph AI provides deep insights into consumer preferences, enabling companies to develop new products, enter new markets, and optimize existing products with confidence. With Gastrograph AI, companies can reduce time-to-market costs, simplify product development, and gain access to trustworthy insights, leading to measurable results and a competitive edge in the global marketplace.

Play2Learn

Play2Learn is an AI-powered learning platform that revolutionizes training and lifelong learning through gamification. It offers a suite of tailored games that foster skill development, virtual empowerment, and real-time insights. The platform leverages AI tools for seamless customization, creating immersive scenarios that test player knowledge through relatable analogies. With its intuitive dashboard, Play2Learn provides detailed metrics to identify knowledge gaps and make informed decisions. The platform's gamified approach engages players with levels, scores, and badges, encouraging active participation and knowledge retention.

awesome-ai-summerschool

This repository contains a comprehensive list of summer and winter schools in artificial intelligence, machine learning, medical imaging, and healthcare. It provides detailed information about upcoming events, including the name, venue, date, deadline, organizers, fees, and scholarship details. The repository aims to share these opportunities with the community, including aspiring AI researchers, engineers, and data scientists.

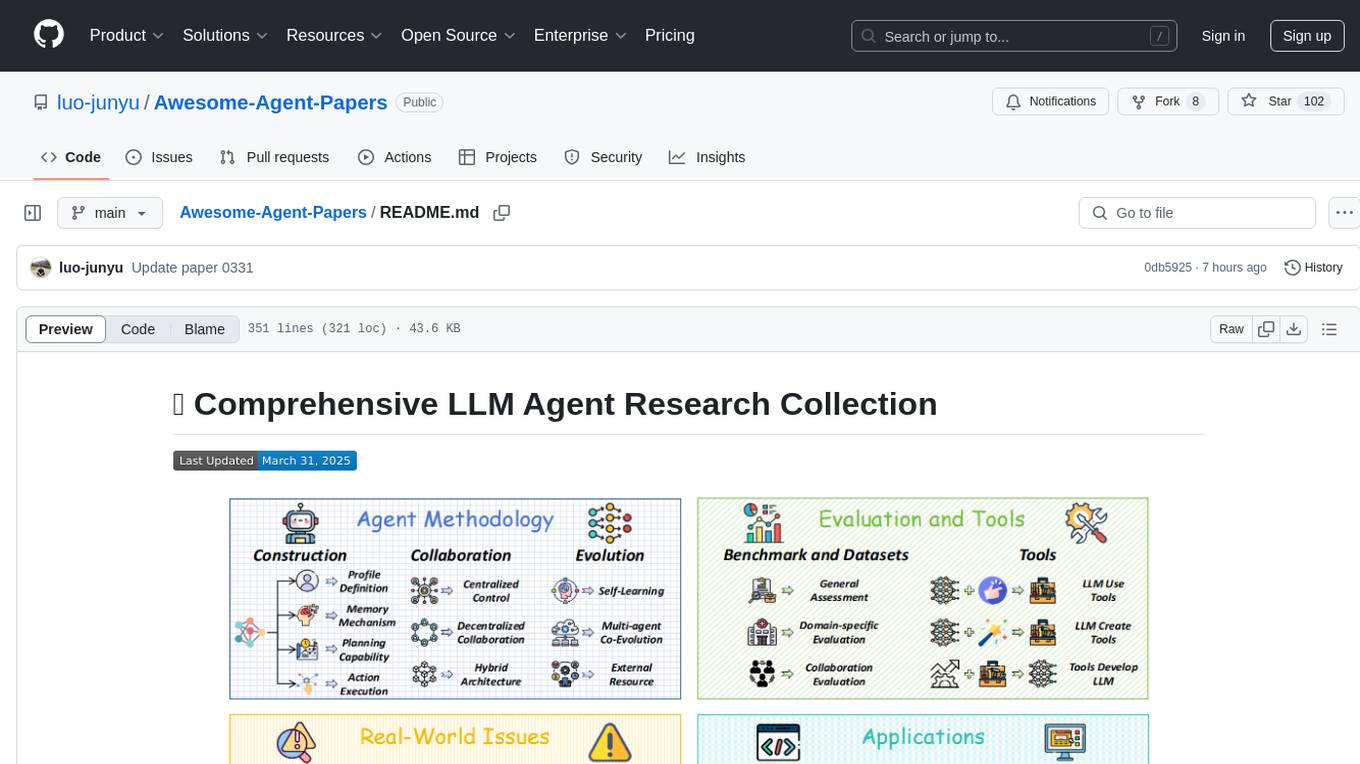

Awesome-Agent-Papers

This repository is a comprehensive collection of research papers on Large Language Model (LLM) agents, organized across key categories including agent construction, collaboration mechanisms, evolution, tools, security, benchmarks, and applications. The taxonomy provides a structured framework for understanding the field of LLM agents, bridging fragmented research threads by highlighting connections between agent design principles and emergent behaviors.

LLM-Agents-Papers

LLM-Agents-Papers is a repository that lists papers related to Large Language Model (LLM) based agents. It covers topics including surveys, planning, feedback & reflection, memory mechanisms, role playing, game playing, tool usage & human-agent interaction, benchmarks & evaluation, environments & platforms, agent frameworks, multi-agent systems, and agent fine-tuning. It provides a comprehensive collection of research papers on LLM-based agents, exploring different aspects of AI agent architectures and applications.

SurveyX

SurveyX is an advanced academic survey automation system that leverages Large Language Models (LLMs) to generate high-quality, domain-specific academic papers and surveys. Users can request comprehensive academic papers or surveys tailored to specific topics by providing a paper title and keywords for literature retrieval. The system streamlines academic research by automating paper creation, saving users time and effort in compiling research content.

interpret

InterpretML is an open-source package that incorporates state-of-the-art machine learning interpretability techniques under one roof. With this package, you can train interpretable glassbox models and explain blackbox systems. InterpretML helps you understand your model's global behavior, or understand the reasons behind individual predictions. Interpretability is essential for:

- Model debugging: Why did my model make this mistake?
- Feature engineering: How can I improve my model?
- Detecting fairness issues: Does my model discriminate?
- Human-AI cooperation: How can I understand and trust the model's decisions?
- Regulatory compliance: Does my model satisfy legal requirements?
- High-risk applications: Healthcare, finance, judicial, ...
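To make the idea of explaining a blackbox system more concrete, here is a minimal sketch of a permutation-style feature importance check in plain Python. It is not InterpretML's API; the function names, the toy model, and the data are all hypothetical. A fixed cyclic shift of each column stands in for the usual random shuffle so that the result is reproducible.

```python
from typing import Callable, List, Sequence

Row = Sequence[float]
Model = Callable[[Row], int]


def accuracy(model: Model, rows: Sequence[Row], labels: Sequence[int]) -> float:
    """Fraction of rows for which the model predicts the given label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(labels)


def shifted_column_importance(
    model: Model, rows: Sequence[Row], labels: Sequence[int]
) -> List[float]:
    """Accuracy drop when each feature column is cyclically shifted by one row.

    A large drop suggests the model relies on that feature; a drop of zero
    suggests the model ignores it on this data.
    """
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        shifted = column[1:] + column[:1]  # deterministic permutation of the column
        perturbed = [
            list(row[:j]) + [value] + list(row[j + 1:])
            for row, value in zip(rows, shifted)
        ]
        importances.append(baseline - accuracy(model, perturbed, labels))
    return importances


def black_box(row: Row) -> int:
    """Hypothetical opaque model: in reality we would not see this rule."""
    return 1 if row[0] > 0.5 else 0


rows = [[0.1, 5.0], [0.9, 3.0], [0.2, 4.0], [0.8, 1.0]]
labels = [0, 1, 0, 1]
importances = shifted_column_importance(black_box, rows, labels)
print(importances)  # [1.0, 0.0]
```

In this toy case the first feature carries all of the signal and the second is ignored, which is what the two scores reflect. A real analysis would use random shuffles repeated several times and a held-out dataset.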

ai-collection

The ai-collection repository is a collection of various artificial intelligence projects and tools aimed at helping developers and researchers in the field of AI. It includes implementations of popular AI algorithms, datasets for training machine learning models, and resources for learning AI concepts. The repository serves as a valuable resource for anyone interested in exploring the applications of artificial intelligence in different domains.

kaapana

Kaapana is an open-source toolkit for state-of-the-art platform provisioning in the field of medical data analysis. The applications comprise AI-based workflows and federated learning scenarios with a focus on radiological and radiotherapeutic imaging. Obtaining the large amounts of medical data needed to develop and train modern machine learning methods is extremely challenging and often fails in a multi-center setting, e.g. due to technical, organizational, and legal hurdles. In contrast, a federated approach, in which the data remains under the authority of the individual institutions and is processed only on-site, is well suited to overcoming these difficulties. Following this federated concept, the goal of Kaapana is to provide a framework and a set of tools for sharing data processing algorithms, for standardized workflow design and execution, and for performing distributed method development. This will facilitate compliant data analysis, enabling researchers and clinicians to perform large-scale multi-center studies. By adhering to established standards and by adopting widely used open technologies for private cloud development and containerized data processing, Kaapana integrates seamlessly with the existing clinical IT infrastructure, such as the Picture Archiving and Communication System (PACS), and ensures modularity and easy extensibility.
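The federated concept described above can be illustrated with a small sketch of federated averaging, in which each institution trains locally and shares only model parameters and a sample count. This is a generic illustration, not Kaapana's API; the `SiteUpdate` type, the site names, and the numbers are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SiteUpdate:
    """Parameters trained locally at one institution (hypothetical type)."""
    site: str
    weights: List[float]  # locally trained model parameters
    num_samples: int      # size of the local dataset; the raw data stays on-site


def federated_average(updates: List[SiteUpdate]) -> List[float]:
    """Combine site updates into one global model, weighted by sample count."""
    if not updates:
        raise ValueError("need at least one site update")
    dim = len(updates[0].weights)
    if any(len(u.weights) != dim for u in updates):
        raise ValueError("all sites must report the same number of parameters")
    total = sum(u.num_samples for u in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    return [
        sum(u.weights[i] * u.num_samples for u in updates) / total
        for i in range(dim)
    ]


updates = [
    SiteUpdate("hospital_a", [1.0, 2.0], num_samples=100),
    SiteUpdate("hospital_b", [3.0, 4.0], num_samples=300),
]
global_weights = federated_average(updates)
print(global_weights)  # [2.5, 3.5]
```

Only the parameter vectors and sample counts cross institutional boundaries here. Weighting by sample count lets the larger site contribute proportionally more to the global model.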

Awesome-Model-Merging-Methods-Theories-Applications

Awesome-Model-Merging-Methods-Theories-Applications is a comprehensive repository focusing on 'Model Merging in LLMs, MLLMs, and Beyond', providing an exhaustive overview of model merging methods, theories, applications, and future research directions. The repository covers advanced methods, applications in foundation models and other machine learning subfields, and topics such as pre-merging methods, architecture transformation, weight alignment, and basic merging methods.

llms-txt-hub

The llms.txt hub is a centralized repository for llms.txt implementations and resources, facilitating interactions between LLM-powered tools and services with documentation and codebases. It standardizes documentation access, enhances AI model interpretation, improves AI response accuracy, and sets boundaries for AI content interaction across various projects and platforms.

ai-enablement-stack

The AI Enablement Stack is a curated collection of venture-backed companies, tools, and technologies that enable developers to build, deploy, and manage AI applications. It provides a structured view of the AI development ecosystem across five key layers: Agent Consumer Layer, Observability and Governance Layer, Engineering Layer, Intelligence Layer, and Infrastructure Layer. Each layer focuses on specific aspects of AI development, from end-user interaction to model training and deployment. The stack aims to help developers find the right tools for building AI applications faster and more efficiently, assist engineering leaders in making informed decisions about AI infrastructure and tooling, and help organizations understand the AI development landscape to plan technology adoption.

ai-agents-for-beginners

AI Agents for Beginners is a course that covers the fundamentals of building AI Agents. It consists of 10 lessons with code examples using Azure AI Foundry and GitHub Model Catalogs. The course utilizes AI Agent frameworks and services from Microsoft, such as Azure AI Agent Service, Semantic Kernel, and AutoGen. Learners can access written lessons, Python code samples, and additional learning resources for each lesson. The course encourages contributions and suggestions from the community and provides multi-language support for learners worldwide.

holisticai

Holistic AI is an open-source library dedicated to assessing and improving the trustworthiness of AI systems. It focuses on measuring and mitigating bias, explainability, robustness, security, and efficacy in AI models. The tool provides comprehensive metrics, mitigation techniques, a user-friendly interface, and visualization tools to enhance AI system trustworthiness. It offers documentation, tutorials, and detailed installation instructions for easy integration into existing workflows.
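As an illustration of what measuring bias can mean in practice, the sketch below computes a statistical parity difference, a common group fairness metric, in plain Python. It is not the holisticai API; the function names and the example predictions are hypothetical.

```python
from typing import Sequence


def selection_rate(predictions: Sequence[int]) -> float:
    """Fraction of positive (1) predictions within one group."""
    if not predictions:
        raise ValueError("group has no predictions")
    return sum(predictions) / len(predictions)


def statistical_parity_difference(
    group_a: Sequence[int], group_b: Sequence[int]
) -> float:
    """Difference in selection rates between two groups.

    A value of 0 means both groups receive positive predictions at the
    same rate; values far from 0 indicate a disparity worth investigating.
    """
    return selection_rate(group_a) - selection_rate(group_b)


# Hypothetical binary predictions for two demographic groups.
group_a_predictions = [1, 1, 1, 0]
group_b_predictions = [1, 0, 0, 0]

spd = statistical_parity_difference(group_a_predictions, group_b_predictions)
print(spd)  # 0.5
```

Here group A is selected 75% of the time and group B 25% of the time, giving a difference of 0.5. What counts as an acceptable threshold depends on the application and is not defined by this sketch.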

AwesomeResponsibleAI

Awesome Responsible AI is a curated list of academic research, books, codes of ethics, courses, data sets, frameworks, institutes, newsletters, principles, podcasts, reports, tools, regulations, and standards related to Responsible, Trustworthy, and Human-Centered AI. It covers concepts such as Responsible AI, Trustworthy AI, Human-Centered AI, Responsible AI frameworks, and AI Governance. The repository provides a comprehensive collection of resources for individuals interested in ethical, transparent, and accountable AI development and deployment.

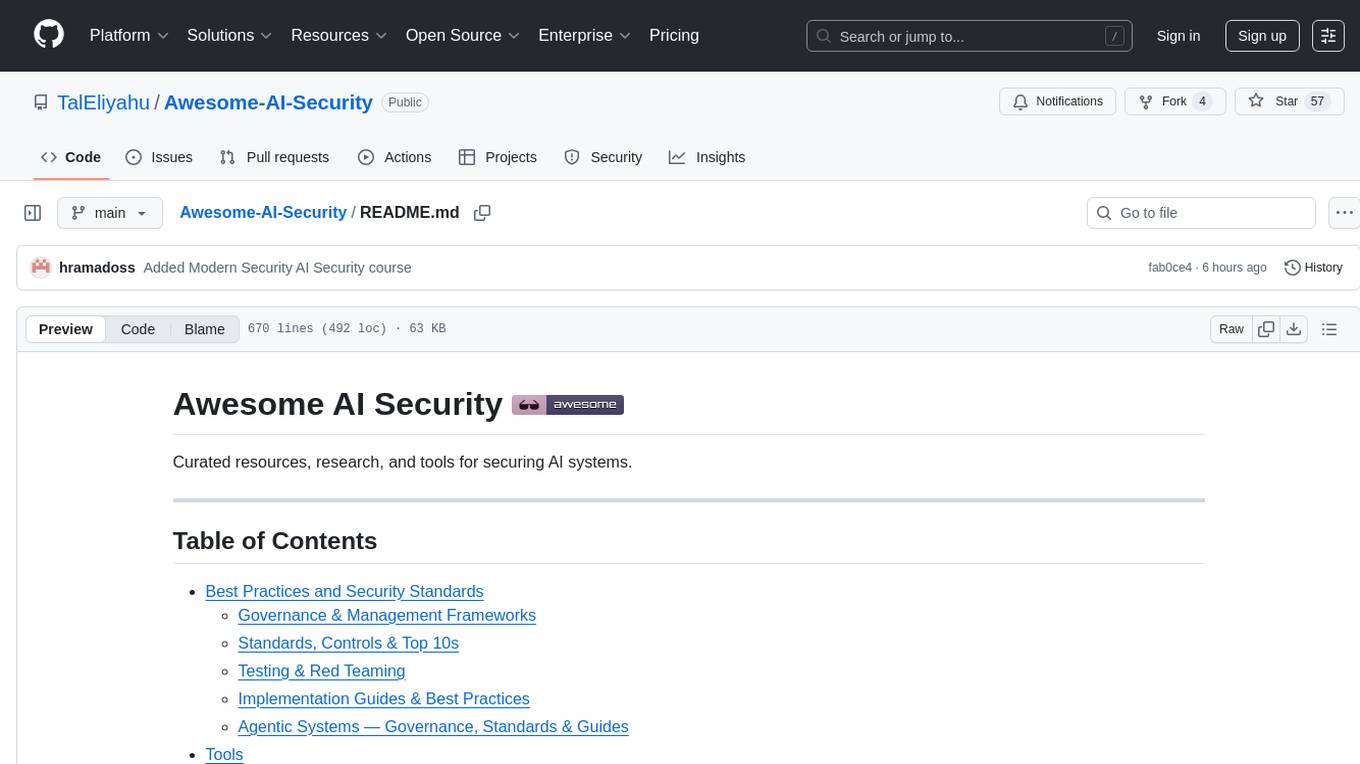

Awesome-AI-Security

Awesome-AI-Security is a curated list of resources for AI security, including tools, research papers, articles, and tutorials. It aims to provide a comprehensive overview of the latest developments in securing AI systems and preventing vulnerabilities. The repository covers topics such as adversarial attacks, privacy protection, model robustness, and secure deployment of AI applications. Whether you are a researcher, developer, or security professional, this collection of resources will help you stay informed and up-to-date in the rapidly evolving field of AI security.